8 Million Fans Tune in to Interactive YouTube Livestream

In August 2022, an estimated eight million video game fans tuned in to Game On, a two-hour YouTube event. The online experience was billed as the streaming platform’s first interactive gaming livestream: more than sixty YouTube creators invited viewers, in English, Dutch, German, French, Spanish, and Portuguese, to add live commentary and vote for their favorite players in an esports jamboree spanning more than a dozen games. Featured titles included the eleventh installment of the classic martial arts fighter Mortal Kombat, the 3D world-builder Minecraft, Poppy Playtime with its monster-filled labyrinths, and more.

To brainstorm ways to present the virtual tournament in a visually engaging style, YouTube turned to the design studio Entropico. Its concept, a custom gaming multiverse of intersecting game environments, spawned the idea of covering competitors live from a gaming hub whose virtual sets reflected the gameplay. The entire hub was staged at XR Studios’ Hollywood location, which features a suite of tools including Megapixel VR products.

The esports arena was familiar territory for XR Studios. “We have previously been involved in esports in our work with Riot Games,” notes J.T. Rooney, President of XR Studios. “Riot is a leader in presenting huge, international esports tournaments. And in 2021 they brought in XR Studios to help them bring augmented reality to a new game called Valorant. Players had to pick a map and the level they were going to play, and as they did that an AR map appeared in between them, like a hologram on the broadcast. It was a good lead-in to this project for YouTube, and others. Esports have been great proponents of XR, using augmented and mixed reality, and entering fantastical worlds in video game design, which leans well into the technology. It was great preparation for YouTube Game On.”


The Game On livestream began with a pre-show cinematic segment that opened on a helicopter-style shot of a Minecraft-like tropical archipelago, where clusters of magic kingdoms were connected by a pathway encircling an active volcano. The camera then swooped in to reveal the game environments that Entropico brought to life, including a selection designated to be livestreamed from XR Studios’ LED virtual shooting studio.

“The map was part of the original creative brief,” Rooney explains. “And it was why XR Studios got involved in the Game On project. The creative company and agency Entropico had a brief from YouTube to create this map that you fly through. Their challenge was how to tie real humans into a performance, where it felt like the players were part of that video game world. XR Studios had a good solution for that because we could place people inside environments created in Unreal Engine, so the players were inside the same game engine that the game was created in, or at least extremely similar to most games. That allowed us, in a very natural way, to do what we do best at XR Studios, and that is to build a bridge to the Metaverse. There is a whole future down the road, where virtual humans will be occupying virtual environments, but right now we are the bridge and a stepping stone for putting real people in a virtual space. That was one of the reasons the client picked XR Studios and our technology. It allowed the players to feel like they were inside a video game.”

Three Video Games Brought to Life in XR-Enabled Virtual Environment

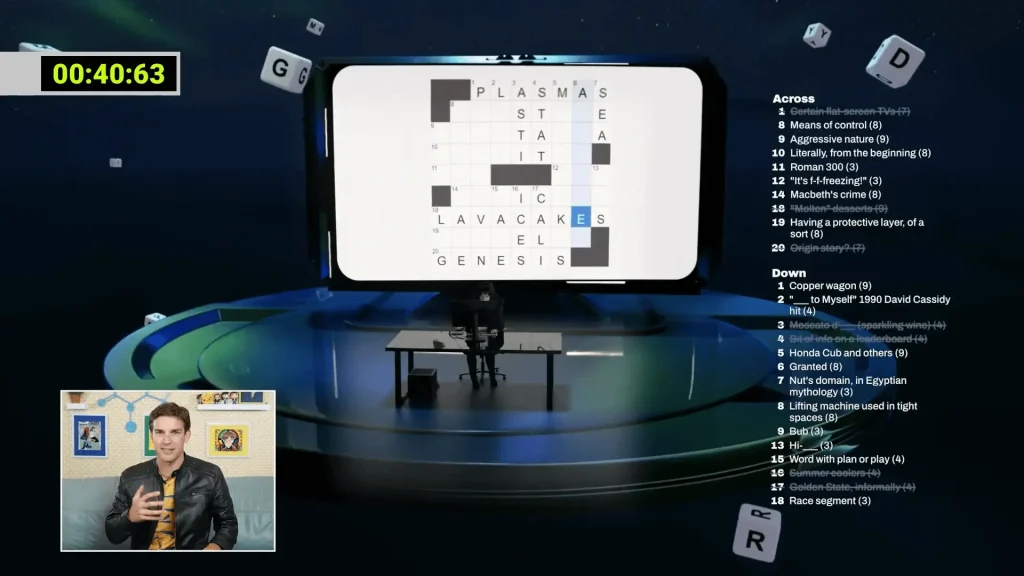

Animation and design studio Buck handled world animation of the game environments for Entropico. The creative team then selected three games to showcase augmented reality gameplay that a cast of celebrity players could occupy in XR Studios’ virtual environment. The three games were AR Crossword, a word puzzle in which players raced a ticking clock to solve crossword clues; Poppy Playtime, which placed players in an abandoned toy factory, navigating labyrinths of lurking monster toys; and Mortal Kombat 11, which transposed the classic martial arts brawler to a subterranean arena in the heart of an erupting volcano.

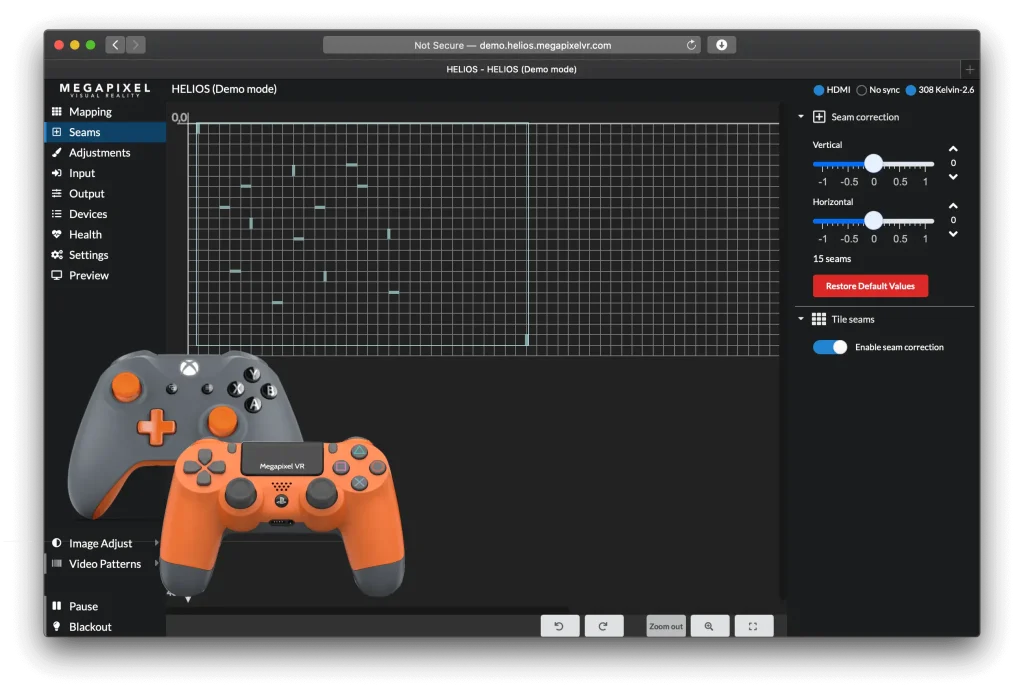

To build XR versions of each gaming environment, Entropico designers adapted game assets and built them into a virtual set environment. Entropico then shared those worlds with XR Studios, which helped integrate them into its workflow and pipeline, including Megapixel VR’s HELIOS® LED processing platform. “How XR Studios works,” explains Rooney, “we partner with creative companies and agencies, and we serve as the technical integration pipeline for the production, as well as the technical producers. Entropico had designers on their side working inside Unreal Engine. They received game assets and developed those in Unreal. They delivered those assets to XR Studios, and our team of creative technologists consulted with Entropico to make sure the assets worked correctly so that scenes weren’t upside down or the wrong scale when they played on set. Working inside Unreal Engine, in the 3D model world, is a very efficient way of getting the content to translate across.”

XR Studios’ Hollywood shooting environment consists of a giant curved wall and floor fitted with light-emitting diode (LED) panels. The rear LED wall is 54 feet wide, curves 35 feet deep, and stands 20 feet tall, providing immersive lighting within the shooting cove alongside XR Studios’ in-house shooting facilities. “We treat our LED studio like a live production stage,” notes Rooney. “We have a lighting rig built into our ceiling at all times, so there is always a permanent setup of cameras, lighting, audio, and LEDs in the space, ready to go.”

To develop gameplay elements, Entropico creative director Joey Hunter and his associates led design teams in Los Angeles, San Francisco, and Sydney, working with YouTube and five additional design partners. Entropico then conferred with XR Studios to plan how physical set pieces on the LED stage would complement the Unreal Engine virtual environments. “It was an interesting blend of what was physical and virtual,” says Rooney. “The team at Entropico understood the technology, so they were good at getting into conversations with us, sharing what belonged to the AR world, what served as the back plate, and what we used in LED. We had consultation calls during pre-production and previsualization. The concept was this world that they were designing existed in a full-360-degree space. We placed our LED screen in the middle of that virtual world, like a window into that world. We could move the virtual environment around, while the players remained in place. That led to more detailed conversations.

“In the Mortal Kombat world inside a giant volcano,” Rooney continues, “they wanted the human players on the LED stage to appear to be on top of a rock, so the lava flowed around them – they didn’t want it to look like players were standing on lava. XR Studios gave Entropico a template with tools and guides that they could set in place in the virtual world. That allowed us to set those environments in the correct spot for the talent when we came to shoot.”
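The “window into a 360-degree world” idea can be pictured as a rigid transform between stage coordinates and virtual-world coordinates: the talent stays on the same physical mark, and the virtual environment moves because the stage’s placement inside that world changes. The Python sketch that follows is a top-down, 2D simplification under stated assumptions; `StagePose`, `stage_to_world`, and `pose_placing_mark_on_target` are hypothetical names, not part of XR Studios’ template or Unreal Engine’s API. It solves for the stage placement that lands a talent mark exactly on a target such as the volcano rock.

```python
import math
from dataclasses import dataclass


@dataclass
class StagePose:
    """Hypothetical top-down pose of the physical stage inside the virtual world."""
    x: float    # world-space position of the stage origin
    y: float
    yaw: float  # rotation of the stage about the vertical axis, in radians


def stage_to_world(pose: StagePose, px: float, py: float) -> tuple:
    """Map a point on the physical stage floor into virtual-world coordinates."""
    c, s = math.cos(pose.yaw), math.sin(pose.yaw)
    return (pose.x + c * px - s * py, pose.y + s * px + c * py)


def pose_placing_mark_on_target(mark: tuple, target: tuple, yaw: float = 0.0) -> StagePose:
    """Find the stage pose that puts a talent mark exactly on a world-space target.

    Solves target = origin + R(yaw) * mark for the stage origin, so the virtual
    world is effectively moved while the talent never leaves the mark.
    """
    c, s = math.cos(yaw), math.sin(yaw)
    mx, my = mark
    return StagePose(target[0] - (c * mx - s * my), target[1] - (s * mx + c * my), yaw)


# Talent stands 2 m in front of the stage origin; the rock sits at (40, -12) in the world.
pose = pose_placing_mark_on_target((0.0, 2.0), (40.0, -12.0), yaw=math.radians(30))
print(stage_to_world(pose, 0.0, 2.0))  # approximately (40.0, -12.0)
```

Solving for the stage pose, rather than moving the talent, mirrors the approach Rooney describes: the world moves and the players stay put.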

Three directors guided the shoot with the player talent, working with XR Studios Chief Technical Officer Scott Millar and XR Studios’ team of producers, managers, engineers, and lighting programmers to choreograph a multi-camera shoot that captured gameplay live, with the players immersed in XR environments on the LED stage.

GhostFrame™ Technology Allows for Multi-Perspective Filming

The LED illusion functioned much like the trompe-l’œil effects of sidewalk chalk drawings, which depict depth and vertiginous perspectives using 2D artistry. “The images only look correct from one angle,” Rooney explains. “That’s what we are doing with the camera for every frame in real time. As the camera moved, it saw the LED perspective shift on screen. Shooting with multiple cameras creates a challenge. An image on the LED wall can look great from one camera’s perspective, while from another camera’s perspective the same image will look completely wrong, because the other camera is seeing that LED image from another angle. Over the years, a big focus at XR Studios for our CTO Scott Millar has been to harness the power of switching cameras, going from Camera One to Camera Two to Camera Three, and changing the LED perspective for each camera in real time. We have a very flexible setup that allows us to do big multi-camera broadcasts, capturing performances while we record multiple perspectives at once. We do that using Megapixel VR’s integration with GhostFrame.”
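The angle-dependence Rooney describes follows from simple geometry. As a hedged illustration, assume a flat LED wall in the plane z = 0, a camera in front of it, and a virtual object behind it: the object must be drawn where the line from the camera to the object crosses the wall. The hypothetical `project_to_wall` function computes that crossing point; a real curved volume with a tracked lens model is far more involved.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def project_to_wall(camera: Vec3, point: Vec3) -> Tuple[float, float]:
    """Return the (x, y) spot on the wall plane z = 0 where `point` must be drawn
    so that, seen from `camera`, it lines up with its intended 3D position.

    Assumes a flat wall at z = 0, the camera in front of it (z > 0) and the
    virtual point behind it (z < 0).
    """
    cx, cy, cz = camera
    px, py, pz = point
    if not (cz > 0 > pz):
        raise ValueError("camera must be in front of the wall and the point behind it")
    # Fraction of the way from the camera to the point at which the ray hits z = 0.
    t = cz / (cz - pz)
    return (cx + t * (px - cx), cy + t * (py - cy))


volcano_peak = (0.0, 3.0, -20.0)  # virtual object 20 m "behind" the wall
cam_one = (-4.0, 1.5, 5.0)        # two cameras 5 m in front of the wall, 8 m apart
cam_two = (4.0, 1.5, 5.0)
print(project_to_wall(cam_one, volcano_peak))
print(project_to_wall(cam_two, volcano_peak))
```

The two results land several meters apart on the wall, which is why a single rendered image cannot be perspective-correct for both cameras at once.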

XR Studios used GhostFrame™ to layer virtual backgrounds as multiple subframe slices. “GhostFrame can subdivide each frame into however many slices the tile is capable of supporting,” explains Megapixel VR Product and Project Manager Scott Blair. “That can include chromakey, checkerboard camera tracking patterns, and their inverses. The operator can make those graphics cancel out, so those layers are not visible in person. It’s super simple to set it up. Each subframe slice is displayed as a column and the operator can click on whatever element they want to display or make invisible – that’s why it’s called ‘GhostFrame.’ It’s a powerful feature.”
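One way to picture subframe slicing is as time multiplexing: each video frame is divided into slices, each camera’s shutter is synchronized to one slice, and the naked eye averages all of them. The Python model that follows is a conceptual sketch, not Megapixel VR’s implementation; it assumes equal-length slices and ideal shutters, and every function name in it is hypothetical. It shows why a tracking pattern followed by its inverse is visible to a camera locked to that slice, yet averages out to flat gray for people on set.

```python
from typing import Dict, List

Image = List[float]  # flattened grayscale pixels, 0.0 (black) to 1.0 (full)


def checkerboard(width: int, height: int, square: int) -> Image:
    """Simple checkerboard, standing in for a camera-tracking pattern."""
    return [1.0 if ((x // square) + (y // square)) % 2 == 0 else 0.0
            for y in range(height) for x in range(width)]


def inverse(img: Image) -> Image:
    """Pixel-wise inverse of an image."""
    return [1.0 - v for v in img]


def camera_sees(slices: Dict[str, Image], shutter_slice: str) -> Image:
    """A camera whose shutter is synced to one slice records only that slice."""
    return slices[shutter_slice]


def eye_sees(slices: Dict[str, Image]) -> Image:
    """The eye integrates every slice in the frame period (equal durations assumed)."""
    n = len(slices)
    return [sum(px) / n for px in zip(*slices.values())]


pattern = checkerboard(8, 8, 2)
slices = {"tracking": pattern, "tracking_inverse": inverse(pattern)}
print(set(eye_sees(slices)))                       # {0.5}: uniform mid-gray in person
print(camera_sees(slices, "tracking") == pattern)  # True: the camera sees the pattern
```

In this simplified model, adding a further slice for each camera’s own background would let several perspectives share one wall, with each camera recording only its own slice.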


The immersive technology allowed camera operators to focus on capturing on-set performances from multiple perspectives within the LED environment, while LED imagery remained pinned to correct camera perspectives. “The camera operators were pretty comfortable in the space,” observes Rooney. “At our studio, we put a lot of effort into making sure the experience feels normal to them. We don’t want them to feel like they have to rethink their whole occupation walking onto the stage. We use normal cameras with normal grip equipment, zoom, and focus controls. GhostFrame allows multiple cameras to operate independently. And so the camera team doesn’t have to modify their workflow. The only thing that can get tricky is when you start layering more than a few cameras. That requires a little bit more choreography.”

XR Studios controlled the flow of LED imagery within the shooting stage using Megapixel VR’s HELIOS, maintaining the visual fidelity, color, and luminance of life-sized environmental imagery. “Our stage is built on Megapixel HELIOS,” states Rooney. “We have a large number of their processors. And we have a tight development relationship with our technical partners. Megapixel’s approach allows for use of an Application Programming Interface [API] for their partners so we could build custom tools that we needed to achieve what we were doing on stage.”

“We love working with technology partners like XR Studios who fully utilize our APIs to customize workflows,” adds Megapixel founder Jeremy Hochman. “Scott Millar and the team have put together an incredibly robust stage that’s really an immense product rather than a one-off science experiment.”

XR Studios calibrated the LED screens using Xbox-style wireless game controllers connected to HELIOS via Bluetooth, which let operators navigate the LED volume dynamically and create seamless blends of light and color across the matrix of LED panels. Scott Millar’s team then implemented an 8K image workflow, which enabled XR Studios to livestream ultra-high-definition imagery to the LEDs in scalable 4K segments. “One of the features that we have developed in HELIOS,” relates Scott Blair, “allows us to group sections of the screen to treat those areas as separate screens. We can control their brightness and color in real time through lighting protocols. We’ve done a lot of work adding color tools that any colorist is familiar with, like Final Cut Pro, or the full ACEScg color workflow. And we partnered closely with XR Studios to develop specific tools for their projects.”
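Grouping sections of the screen can be sketched as named regions of one large canvas, each carrying its own brightness and color gains. The Python example that follows assumes an 8K UHD canvas (7680 × 4320) divided into four 4K UHD segments (3840 × 2160); `ScreenGroup`, `split_into_segments`, and `adjust_pixel` are hypothetical names, and in production such values would be set through HELIOS’s own tools and lighting protocols rather than in Python.

```python
from dataclasses import dataclass
from typing import List, Tuple

CANVAS_W, CANVAS_H = 7680, 4320  # 8K UHD canvas
SEG_W, SEG_H = 3840, 2160        # 4K UHD segment


@dataclass
class ScreenGroup:
    """Hypothetical named region of the LED canvas treated as its own screen."""
    name: str
    x: int
    y: int
    w: int
    h: int
    brightness: float = 1.0                             # overall gain for the group
    rgb_gain: Tuple[float, float, float] = (1.0, 1.0, 1.0)  # per-channel color gain


def split_into_segments(width: int = CANVAS_W, height: int = CANVAS_H,
                        seg_w: int = SEG_W, seg_h: int = SEG_H) -> List[ScreenGroup]:
    """Tile the canvas into segment-sized groups, row by row."""
    return [ScreenGroup(f"seg_{row}_{col}", col * seg_w, row * seg_h, seg_w, seg_h)
            for row in range(height // seg_h) for col in range(width // seg_w)]


def adjust_pixel(group: ScreenGroup, rgb: Tuple[float, float, float]) -> Tuple[float, ...]:
    """Apply the group's brightness and per-channel gain, clamped to 0..1."""
    return tuple(min(1.0, max(0.0, v * g * group.brightness))
                 for v, g in zip(rgb, group.rgb_gain))


groups = split_into_segments()
print([g.name for g in groups])  # ['seg_0_0', 'seg_0_1', 'seg_1_0', 'seg_1_1']
groups[3].brightness = 0.8       # dim one segment independently of the others
print(adjust_pixel(groups[3], (0.5, 0.5, 0.5)))
```

Treating each region as an independent object is what allows one part of the volume to be trimmed live without touching the rest.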

Directors then cued virtual action on the LEDs, along with augmented reality overlays that interacted with live gameplay. “Entropico sometimes needed to choose when specific events would happen at certain times,” says J.T. Rooney. “Other effects were more environmental. We established ahead of time what elements they wanted to cue live, and what objects they wanted to sequence. XR Studios then worked with Entropico to program those parameters. For instance, in Mortal Kombat we knew if they wanted a splash of lava in the volcano we could put that on a trigger. During the shoot, that was either a live call from the director, where we could create the splash by pressing a button, or we could plan splashes to happen in a sequence. It functioned very much like a video game.”
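The split between live calls and pre-planned sequences resembles a simple cue system. The hypothetical `CueBoard` class that follows is a sketch, not XR Studios’ actual tooling: an operator can fire a named effect such as a lava splash on the director’s call, or schedule it on a timeline that fires when show time reaches each cue.

```python
import heapq
from typing import Callable, Dict, List, Tuple


class CueBoard:
    """Hypothetical cue board: named effects fired live or on a timeline."""

    def __init__(self) -> None:
        self.effects: Dict[str, Callable[[], str]] = {}
        self.timeline: List[Tuple[float, str]] = []  # min-heap of (time_s, cue name)
        self.log: List[str] = []                     # record of effects actually fired

    def register(self, name: str, effect: Callable[[], str]) -> None:
        """Associate a cue name with the effect it triggers."""
        self.effects[name] = effect

    def fire(self, name: str) -> None:
        """Live call: the operator presses the button for `name`."""
        self.log.append(self.effects[name]())

    def schedule(self, at_seconds: float, name: str) -> None:
        """Pre-planned cue that fires once show time reaches `at_seconds`."""
        heapq.heappush(self.timeline, (at_seconds, name))

    def tick(self, now: float) -> None:
        """Fire every scheduled cue whose time has arrived, in time order."""
        while self.timeline and self.timeline[0][0] <= now:
            _, name = heapq.heappop(self.timeline)
            self.fire(name)


board = CueBoard()
board.register("lava_splash", lambda: "lava splash")
board.schedule(5.0, "lava_splash")   # sequenced splash at 5 s
board.schedule(12.0, "lava_splash")  # sequenced splash at 12 s
board.fire("lava_splash")            # director's live call
board.tick(10.0)                     # only the 5 s cue is due so far
print(board.log)
```

A min-heap keeps scheduled cues ordered by time, so each `tick` only has to inspect the earliest pending cue.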

Entropico and XR Studios Create Innovative Augmented Reality Effects

The monster-hunting game Poppy Playtime featured players seated in an LED environment representing the labyrinthine corridors and abandoned storerooms of the Playtime Corporation toy factory. The moody lighting set the stage for gameplay as cameras focused on players at their consoles, evading Huggy Wuggy and other creatures.

One of the more challenging Game On concepts involved devising visual motifs for the real-time word puzzle game AR Crossword. “At first, I wasn’t sure how someone would make that interesting,” confesses Rooney. “But Entropico came up with a few clever tricks that we were able to pull off together. We captured the interface of the speed-crossword players and presented that visually behind the player. Watching the players’ physical actions, and taking cues from the player’s thought processes as they were changing letters, made it quite visual. Entropico made another clever choice by adding a layer of augmented reality.” While XR Studios streamed puzzle screens to the LED walls and floor, spinning cuboid graphics drifted into view. “The foreground AR layer of large, floating crossword cubes occluded the players on the stage. That added a sense of depth. At Entropico’s request, we manually placed those cubes during the shoot, rather than generating them randomly in a particle animation system. That way, the client could direct us to make sure none of the cubes obstructed a player’s face for a long time. The player could not see those augmented reality elements, because we digitally placed the cubes on top of the camera image. If we wanted the talent to see where those objects were floating, we’d add reference monitors in front of them, or place markers on the LED floor.”
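Because the cubes exist only in the composited camera image, placing them amounts to layering graphics over each video frame, and checking that none blocks a face for too long is a screen-space overlap test. The Python sketch that follows assumes straight (non-premultiplied) alpha and rectangular bounding boxes for faces and cubes; `Box`, `longest_face_block`, and the 30 percent threshold are illustrative assumptions, not a description of the production tooling.

```python
from dataclasses import dataclass
from typing import List, Tuple

RGB = Tuple[float, float, float]


def over(fg: RGB, alpha: float, bg: RGB) -> RGB:
    """Porter-Duff 'over' for one pixel, straight (non-premultiplied) alpha."""
    return tuple(f * alpha + b * (1.0 - alpha) for f, b in zip(fg, bg))


@dataclass
class Box:
    """Screen-space rectangle in pixels: left, top, right, bottom."""
    l: float
    t: float
    r: float
    b: float

    def overlap_fraction(self, other: "Box") -> float:
        """Fraction of this box's area covered by `other`."""
        w = max(0.0, min(self.r, other.r) - max(self.l, other.l))
        h = max(0.0, min(self.b, other.b) - max(self.t, other.t))
        area = (self.r - self.l) * (self.b - self.t)
        return (w * h) / area if area > 0 else 0.0


def longest_face_block(face: Box, cubes_per_frame: List[List[Box]],
                       fps: float, threshold: float = 0.3) -> float:
    """Longest run, in seconds, in which any cube covers more than
    `threshold` of the face box."""
    longest = run = 0
    for cubes in cubes_per_frame:
        blocked = any(face.overlap_fraction(c) > threshold for c in cubes)
        run = run + 1 if blocked else 0
        longest = max(longest, run)
    return longest / fps


# A half-transparent red cube pixel over a blue camera pixel.
print(over((1.0, 0.0, 0.0), 0.5, (0.0, 0.0, 1.0)))  # (0.5, 0.0, 0.5)
```

An operator placing cubes by hand could watch a figure like `longest_face_block` and nudge a cube whenever it climbs past an agreed limit.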


XR Studios drew on its experience of creating illusions of player interactivity from an earlier esports assignment, which had combined virtual and augmented reality elements to create play-by-plays for a first-person shooter game. “Our CTO Scott Millar did an amazing case study in 2018,” reveals Rooney. “He built a stage for an event called HP Omen. As gamers were playing, announcers could step inside the game to review the action. They could stand next to a character with his gun, fly around the scene and analyze the scene. It has the potential to be quite immersive.”

A Successful Project

From conception to production, the YouTube Game On project took approximately six weeks. After pre-production, Entropico joined the XR Studios team for one day of preparation inside the Hollywood studio to pre-light scenes and check XR content, followed by a single day of shooting with the celebrity players. Bulldog Digital Media handled livestream facilities and Hovercast handled interactivity.

YouTube metrics revealed that the online event clocked up more than 38,000 comments per minute, while viewers voted for gaming creators and Internet personalities including Bella Poarch, MatPat, Jake Fellman, and Shutter Authority. “Based on the comments and the viewership,” observes J.T. Rooney, “it was well reviewed. I think what YouTube has seen, and what other Internet sites are seeing over the last couple of years, is that a lot of the younger generation is happy to stay at home and play video games all day, on Twitch and other platforms. It’s a new form of culture, with interactions and mini-stories layered within stories that make the experience compelling. I think it was helpful for viewers during the Game On livestream to see familiar players in action. In Mortal Kombat, one of the players inside the volcano was Bella Poarch, who is a big TikTok star and Instagram influencer. That added another layer of relatability.

“On the technical side, one of our biggest accomplishments for Game On was the application of the technology. It involved the creation of a variety of scenes that all had to take place during two shooting days in our studio. Being digital, we could push a button and change the environment to another world. Most importantly, it was the right use of the technology. It helped solve a problem, which was how to capture the experience of players within a video game environment and make that entertaining. It was a wide variety of work, but it was the right fit.”


About

Megapixel VR is an innovative technology partner dedicated to delivering fast-tracked, customized, state-of-the-art LED displays and processing to the world’s leading entertainment, film & TV, and architectural applications.

Entropico is a global creative company operating at the bleeding edges of technology, culture, and art. We solve complex business challenges with innovative solutions and flexible thinking.

XR Studios is a cutting-edge digital production company specializing in immersive technology for entertainment. Known for producing Extended Reality (XR) and Augmented Reality (AR) workflow solutions, XR Studios executes innovative experiences for some of the most renowned artists and brands across the globe.